How to Read CSV in Polars Explained

Polars, a fast DataFrame library for Python, provides a familiar and powerful interface for processing structured data. It is especially convenient for CSV files, offering two straightforward functions, read_csv() and scan_csv(). The first closely resembles its pandas counterpart. Let's learn how to make the most of them.

Importing CSV with read_csv()

Polars provides an easy-to-use function, read_csv(), for importing CSV files. Its usage closely mirrors pandas' read_csv(), which eases the transition for pandas users. Here's a quick demonstration:

```python
import polars as pl

# Read CSV with read_csv
df = pl.read_csv('data_sample.csv')

print(df)
```

Output:

```
shape: (3, 4)
┌───────────┬───────┬─────┬─────────────────┐
│ studentId ┆ Name  ┆ Age ┆ FirstEnrollment │
│ ---       ┆ ---   ┆ --- ┆ ---             │
│ i64       ┆ str   ┆ i64 ┆ str             │
╞═══════════╪═══════╪═════╪═════════════════╡
│ 1         ┆ Mike  ┆ 24  ┆ 2020-01-17      │
│ 2         ┆ Sarah ┆ 33  ┆ 2021-07-23      │
│ 3         ┆ John  ┆ 19  ┆ 2022-12-20      │
└───────────┴───────┴─────┴─────────────────┘
```

Parsing Dates with read_csv()

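While importing CSV files, you might want to parse certain columns as date or datetime values. This is enabled by the try_parse_dates=True parameter (called parse_dates in older Polars releases), which makes Polars try to infer dates from the column contents. For instance: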

```python
# Read CSV and try to parse date-like columns (e.g. FirstEnrollment) as dates
df_dates = pl.read_csv('data_sample.csv', try_parse_dates=True)

print(df_dates)
```

Changing Column Types On-the-fly

You can also change the data type of specific columns in the same expression that reads the file. For instance, here's how to cast the 'Age' column from i64 to Int32 by chaining with_columns() onto read_csv():

```python
# Read the CSV, then cast the 'Age' column from i64 to Int32
df_age_casted = pl.read_csv('data_sample.csv', try_parse_dates=True).with_columns(
    pl.col('Age').cast(pl.Int32)
)

print(df_age_casted)
```

One advantage Polars holds over pandas is that it prints each column's data type beneath the column name when displaying a DataFrame (the i64 and str row in the earlier output), which makes data type problems much easier to troubleshoot.

Optimizing Reads with scan_csv()

For large datasets, Polars offers a more resource-efficient function: scan_csv(). It uses lazy evaluation, so instead of reading the file right away it returns a LazyFrame, and data is loaded into memory only when collect() is called. This can reduce memory overhead, because Polars can then read only the columns and rows the query actually needs. Here's a quick look:

```python
# Lazily scan the CSV; nothing is read until collect() is called
q = pl.scan_csv('data_sample.csv')

df_lazy = q.collect()

print(df_lazy)
```

Remember to call collect() to execute the query. Without it, you only have a LazyFrame, a representation of the planned operations (the query plan), not the data itself.

These are just a few of the ways Polars can be used to interact with CSV files. Its clear syntax and powerful functions make it an excellent tool for data manipulation in Python.

Understanding Eager and Lazy Execution in Polars

Polars supports both eager and lazy operations. In eager mode, computations are executed immediately, while in lazy mode, operations are queued and evaluated only when needed, optimizing for efficiency and memory usage.

Employing Lazy Evaluation with scan_csv()

With scan_csv(), the data isn't loaded into memory right away. Instead, Polars constructs a query plan which includes the operations to be performed. This query plan gets executed only when collect() is called. This technique, known as lazy evaluation, can result in more efficient memory usage and faster computation, especially with large datasets. Here's an example:

```python
# Lazy read with scan_csv
query = pl.scan_csv('data_sample.csv')

print(query)
```

Running this code prints a summary of the query plan, but the operations themselves have not been executed yet. The output looks similar to the following (the exact text varies between Polars versions):

```
naive plan: (run LazyFrame.describe_optimized_plan() to see the optimized plan)

CSV SCAN data_sample.csv
PROJECT */4 COLUMNS
```

To execute this plan and load the data into memory, call the collect() method:

```python
df_lazy = query.collect()

print(df_lazy)
```

More Than Just Reading CSVs: Data Manipulation

Beyond reading CSVs, Polars offers a comprehensive set of data manipulation operations that cover common pandas workflows, which makes it an efficient and versatile tool for data analysis. Whether you're filtering rows with filter(), transforming columns with with_columns(), or aggregating with group_by().agg(), Polars handles it with strong performance.

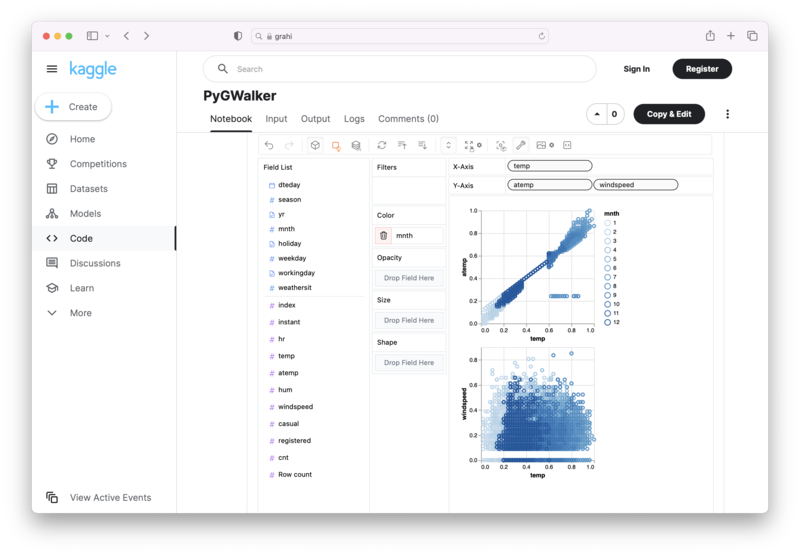

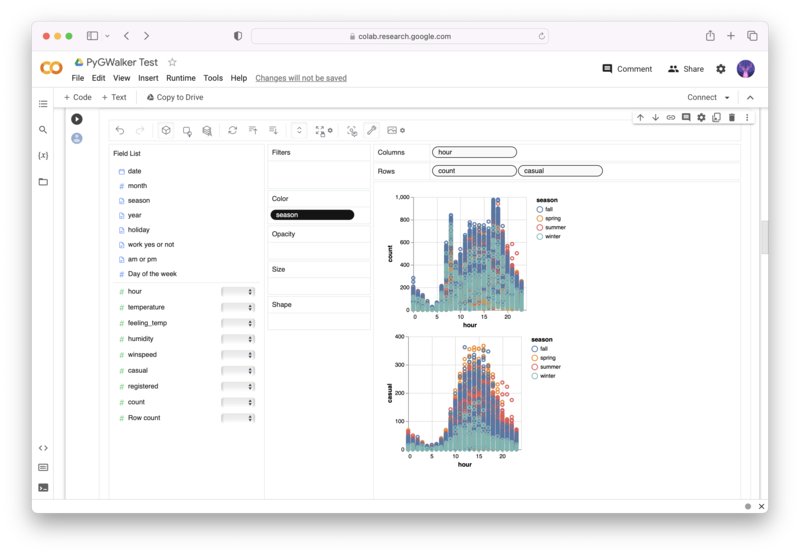

Visualize Your Polars Dataframe with PyGWalker

PyGWalker is an open-source Python library that helps you create data visualizations from your Polars DataFrame with ease.

There's no need for complicated processing in Python code: simply import your data, then drag and drop variables to create all kinds of data visualizations.

Here's how to use PyGWalker in your Jupyter Notebook:

```bash
pip install pygwalker
```

```python
import pygwalker as pyg

gwalker = pyg.walk(df)
```

Alternatively, you can try it out in Kaggle Notebook/Google Colab:

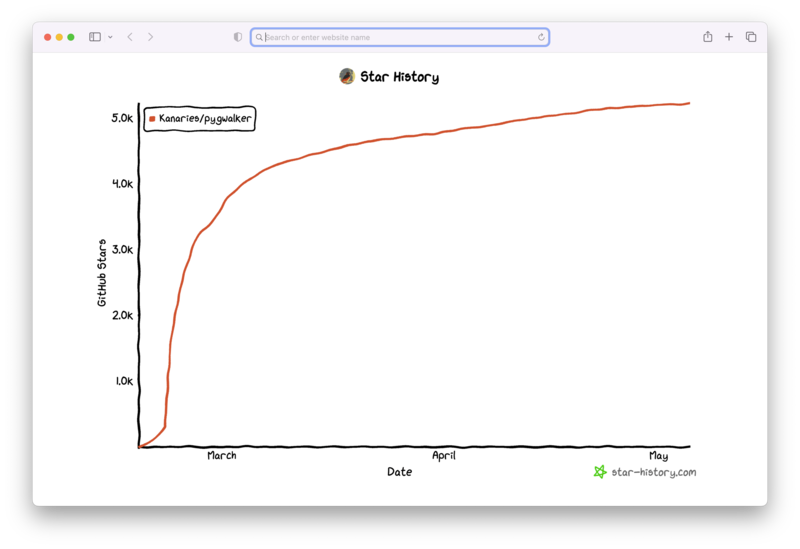

PyGWalker is built on the support of our open-source community. Don't forget to check out PyGWalker on GitHub and give us a star!

FAQs:

While navigating through Polars, you might encounter some questions. Here are some common ones:

- What is the main difference between the read_csv() and scan_csv() functions in Polars?

read_csv() reads a CSV file eagerly, loading all of its data into memory immediately. scan_csv(), on the other hand, operates lazily: it builds a query plan and doesn't read the data until collect() is called. This makes scan_csv() more efficient for large datasets, since the optimizer can push column selections and filters down into the scan and load only the data the query needs.

- Can Polars handle date parsing while reading CSV files?

Yes. When using read_csv(), set try_parse_dates=True (called parse_dates in older Polars releases), and Polars will try to parse columns containing dates automatically. Columns it cannot parse remain strings.

- Can I change the data type of specific columns while reading a CSV file?

Absolutely. To have the reader itself use a specific type, pass schema_overrides (called dtypes in older Polars releases), for example pl.read_csv('data.csv', schema_overrides={'Age': pl.Int32}). Alternatively, cast right after reading with with_columns() and cast(): df = pl.read_csv('data.csv').with_columns(pl.col('Age').cast(pl.Int32)) changes the 'Age' column to Int32.